The problem with noise

Ray tracing, like photography, needs a lot of light samples to get a clear image, and in both cases noise is always a challenge. In photography, when there’s not enough light, the samples are sparse and the overall image will be grainy. The same is true for ray tracing and CG. If you ray trace for only a short time, the samples are limited and the image will be noisy. In both cases, the solution is to allow for more samples. To get more samples in photography, you can open the aperture or increase the exposure time to let in more photons. In ray tracing, you can either wait longer to calculate more samples, or add more computing power to resolve the image faster.
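To make the link between sample count and noise concrete, here is a minimal sketch in Python. It is not V-Ray code: the `render_pixel` and `noise_level` functions are hypothetical, and each "light sample" is assumed to be a random brightness between 0 and 1. The sketch renders the same pixel many times and measures how much the result varies.

```python
import numpy as np

def render_pixel(num_samples, rng):
    """Estimate one pixel by averaging random light samples.

    Assumption for illustration: each sample is a brightness drawn
    uniformly from [0, 1), so the true pixel value is 0.5.
    """
    return rng.random(num_samples).mean()

def noise_level(num_samples, trials=2000, seed=0):
    """Standard deviation of the pixel estimate over many repeated renders."""
    rng = np.random.default_rng(seed)
    estimates = [render_pixel(num_samples, rng) for _ in range(trials)]
    return float(np.std(estimates))

# Each row uses four times as many samples as the previous one.
for n in (4, 16, 64, 256):
    print(n, round(noise_level(n), 4))
```

In this toy setup, quadrupling the samples only roughly halves the noise, which is why brute-force sampling gets expensive quickly.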

Another thing that can help with noise in an image is called denoising. The simplest denoising solution is to blur all the nearby pixels to get an average, but the result will just be an overall blurry image. If the denoising solution is also able to detect edges and make sure they remain sharp, the result will be better. Improving denoising beyond this is a more difficult problem to solve.

In the example below, we start with a noisy render that has only a few samples. The second image shows what happens when you apply a simple blur, and the third image shows what happens when you detect edges and blur at the same time. For these images, we used Photoshop’s Smart Blur filter. Technically, Photoshop’s denoiser would work far better than Smart Blur, but this helps illustrate the point.

Original noisy image

Image with Gaussian blur in Photoshop

Image with Photoshop Smart Blur using edge detection
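The difference between a plain blur and an edge-aware blur can also be sketched in a few lines of Python. This is only an illustration on a single row of pixels, not what Photoshop or V-Ray actually do. The edge-aware version is a bilateral-style filter, and the function names and the `tolerance` parameter are our own.

```python
import numpy as np

def box_blur(signal, radius):
    """Plain blur: every pixel becomes the average of its neighbors."""
    out = np.empty_like(signal)
    for i in range(len(signal)):
        lo, hi = max(0, i - radius), min(len(signal), i + radius + 1)
        out[i] = signal[lo:hi].mean()
    return out

def edge_aware_blur(signal, radius, tolerance):
    """Bilateral-style blur: neighbors with very different values count less."""
    out = np.empty_like(signal)
    for i in range(len(signal)):
        lo, hi = max(0, i - radius), min(len(signal), i + radius + 1)
        window = signal[lo:hi]
        # Weight is near 1 for similar values and near 0 across a strong edge.
        weights = np.exp(-((window - signal[i]) ** 2) / (2 * tolerance ** 2))
        out[i] = np.sum(weights * window) / np.sum(weights)
    return out

# Hypothetical scanline: a dark region followed by a bright one, plus noise.
rng = np.random.default_rng(1)
clean = np.where(np.arange(200) < 100, 0.2, 0.8)
noisy = clean + rng.normal(0.0, 0.05, clean.shape)

blurred = box_blur(noisy, radius=5)
smart = edge_aware_blur(noisy, radius=5, tolerance=0.15)

def edge_error(image):
    """Mean absolute error in the ten pixels around the edge."""
    return float(np.abs(image[95:105] - clean[95:105]).mean())

print("plain blur error at edge:     ", edge_error(blurred))
print("edge-aware blur error at edge:", edge_error(smart))
```

Both filters smooth the flat regions, but only the edge-aware one keeps the step between dark and bright sharp.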

In V-Ray 3.x we introduced our own denoising solution. It allows the user to render an image up to a certain point and then let V-Ray denoise it based on the information it has. This process runs very well on GPUs. As we mention in our Guide to GPU, GPUs are excellent at massively parallel tasks, and denoising is one of those tasks. With GPUs, we get about a 20x speed boost, and the process can finish in just a few seconds.

But it could be faster. What if, instead of solving the denoising problem independently for each image, the denoiser could refer back to past denoising solutions to solve the problem faster?

Using neural networks to help denoising

Using this previously “learned” data is the basis of machine learning. V-Ray can use the data learned during the light cache pass to help solve a variety of rendering problems much faster. For example, the Adaptive Sampler, Adaptive Lights and new Adaptive Dome Light all use this concept. But what if V-Ray could learn from other renderings too, not just the one it’s working on?

Right now, there’s a lot of buzz surrounding the topics of Deep Learning and Deep Neural Networks. (Really, they’re the same thing.) The depth of a neural network simply refers to the number of layers that the network contains. The idea is to build a computational network that learns how to solve specific problems, either from provided solutions to the problem, or by learning from its own tests. Once the network better understands how to solve a problem, such as denoising, it can solve it much faster.

Imagine if you didn’t know that 5+5=10 and had to count on your fingers each time. It would be a much slower solution. But because you already know the answer, you can skip counting on your fingers, making it much faster.

In theory, by feeding the neural network thousands of different noisy renders along with the clean final versions, it could learn how to solve the noise problem using this image data, and then apply the solution to other cases.
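The idea of learning from noisy/clean pairs can be sketched without a neural network at all. The example below is a deliberately simplified stand-in: instead of a deep network it fits a single linear filter with least squares, and instead of renders it uses random 1D signals. All names (`make_pair`, `patches`, `train`, `denoise`) are hypothetical and have nothing to do with how OptiX is implemented.

```python
import numpy as np

def make_pair(rng, length=256, noise=0.2):
    """Return one (noisy, clean) training pair.

    Assumption for illustration: a "render" is a sum of three random sines.
    """
    x = np.linspace(0, 2 * np.pi, length)
    clean = sum(rng.normal() * np.sin(k * x + rng.uniform(0, 2 * np.pi))
                for k in range(1, 4))
    return clean + rng.normal(0, noise, length), clean

def patches(signal, radius):
    """Stack every window of 2*radius+1 neighboring pixels as one row."""
    return np.lib.stride_tricks.sliding_window_view(signal, 2 * radius + 1)

def train(pairs, radius):
    """Fit filter weights that best map noisy windows to clean center pixels."""
    X = np.vstack([patches(noisy, radius) for noisy, _ in pairs])
    y = np.concatenate([clean[radius:-radius] for _, clean in pairs])
    weights, *_ = np.linalg.lstsq(X, y, rcond=None)
    return weights

def denoise(noisy, weights):
    """Apply the learned filter; the output is shorter by radius on each side."""
    return patches(noisy, len(weights) // 2) @ weights

rng = np.random.default_rng(0)
radius = 4
weights = train([make_pair(rng) for _ in range(50)], radius)

# Apply the learned weights to a signal that was not part of the training set.
test_noisy, test_clean = make_pair(rng)
target = test_clean[radius:-radius]
error_before = np.mean((test_noisy[radius:-radius] - target) ** 2)
error_after = np.mean((denoise(test_noisy, weights) - target) ** 2)
print("error before:", error_before, "error after:", error_after)
```

The expensive part (`train`) happens once, up front. Applying the learned weights to a new image is a single cheap pass, which is the same trade-off that makes a trained network fast at denoising time.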

That’s exactly what NVIDIA has introduced with their OptiX AI-accelerated denoiser. They built a neural network using thousands of images rendered in Iray, and this learned data can now be applied to other ray traced images. We decided to experiment with how this learned data might benefit V-Ray.

It’s all about speed

What’s the advantage of NVIDIA’s OptiX denoiser over V-Ray’s denoiser? While the V-Ray denoiser is very fast and can denoise an image in seconds on a GPU, the OptiX solution can denoise a render in real-time. But let’s keep in mind that a denoised image is never quite going to be accurate. By definition, it gives you the best guess for what it thinks the final image should be. At the same time, accuracy may not be the most important thing. If you can get a workable noise-free image in real-time, it could have an impact on your workflow, especially during lighting and look development.

How the NVIDIA OptiX denoiser works in V-Ray

It’s possible to use the learned data with V-Ray, even though the information was gathered using Iray renders. We could even retrain the network using V-Ray renders.

The more “real” information the denoiser knows about the image, as opposed to guessing, the better it can do its job. For example, let’s look at edge detection. Because edges are generally detected based on high contrast between neighboring pixels, a noisy image may not have enough information to detect the edges well. A diffuse pass and a normals pass rendered in V-Ray give the denoiser enough information about the scene to determine where the edges are.
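Here is a rough sketch of why a normals pass helps. It is not the OptiX algorithm: it is a simple cross-bilateral filter on one row of pixels, where the blur weights come from the noise-free normals rather than from the noisy color. The `guided_blur` function, its `tolerance` parameter and the floor-and-wall scene are assumptions made up for illustration, and only the normals pass is used here.

```python
import numpy as np

def guided_blur(color, normals, radius, tolerance=0.1):
    """Average nearby pixels, but only those whose normal matches the center's.

    color:   (N,) noisy brightness values along one scanline
    normals: (N, 3) unit surface normals for the same pixels (noise-free guide)
    """
    out = np.empty_like(color)
    for i in range(len(color)):
        lo, hi = max(0, i - radius), min(len(color), i + radius + 1)
        # 1 - dot product is 0 for identical normals and grows as they diverge.
        difference = 1.0 - normals[lo:hi] @ normals[i]
        weights = np.exp(-(difference ** 2) / (2 * tolerance ** 2))
        out[i] = np.sum(weights * color[lo:hi]) / np.sum(weights)
    return out

# Hypothetical scene: a floor (normal pointing up) meeting a wall
# (normal pointing toward the camera) halfway along the scanline.
n = 200
is_floor = np.arange(n) < n // 2
normals = np.where(is_floor[:, None], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0])
clean = np.where(is_floor, 0.3, 0.5)

# The noise is larger than the contrast across the edge, so the edge
# cannot be found reliably from the color values alone.
rng = np.random.default_rng(2)
noisy = clean + rng.normal(0.0, 0.3, n)

denoised = guided_blur(noisy, normals, radius=10)
print("mean error before:", np.abs(noisy - clean).mean())
print("mean error after: ", np.abs(denoised - clean).mean())
```

Because the normals are clean even when the color is not, the filter knows exactly where the floor stops and the wall begins, and never averages across that boundary.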

With the combination of learned data and render elements, the OptiX denoiser can give you a very good prediction of the final image, even with only a few samples. While this type of denoising will work on GPUs or CPUs, the biggest benefit comes when working interactively.

Some example results

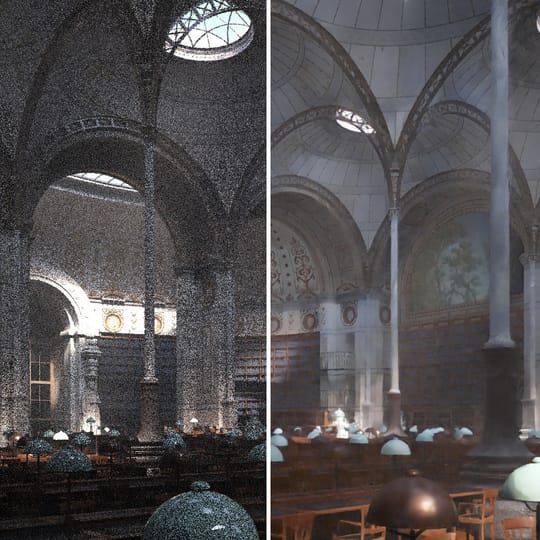

In this example we are looking at a fairly complex scene with a lot of Global Illumination. We used both the diffuse and normals passes as input to the denoiser. We took snapshots during the render process to show both the original render and the denoised one.

You will notice that at pass 1, we get an almost usable image from very little information. However, its lighting is not very close to that of the final image. As the render progresses, the denoised result gets much closer, although there are still differences between the final image and the denoised final image.

In this video we get to see the true power of the real-time denoising that can be achieved with NVIDIA's OptiX. Looking at several scenes, the user gets a noise-free image as the render progresses.

Current limitations

There are a few limitations that may be resolved in the future.

- The OptiX denoised image is only an approximation. It may be a very close guess to the final result, but an actual rendering will be more accurate.

- OptiX denoised images are clamped. Currently, the denoised image is clamped at 1, and you won’t get the same range as an HDRI.

- OptiX denoising refreshes after each V-Ray pass. V-Ray's progressive rendering is done in passes. In simple scenes, passes can take milliseconds and in more complex scenes, passes can take minutes. With the OptiX denoiser you’ll only see the denoised result after each pass.

- OptiX denoising is very good during the early stages of rendering when there’s more noise. In later stages with less noise, OptiX denoising may be less advantageous.

- OptiX may not be ideal for animation. For animation we suggest using V-Ray’s denoiser with cross-frame denoising.

- OptiX only denoises the beauty render, while the upcoming V-Ray Next can denoise individual render elements.

- When using V-Ray’s CPU renderer, you’ll need to turn off the anti-aliasing filter for the OptiX denoiser to work.

- While V-Ray rendering can take place on any hardware, the OptiX denoiser requires NVIDIA GPUs.
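To see why the clamping limitation in the list above matters, here is a small sketch using made-up pixel values. It is only an illustration of clamping at 1 in general; the `lower_exposure` helper is hypothetical and not part of V-Ray or OptiX.

```python
import numpy as np

# Hypothetical pixel values: two ordinary tones and two bright highlights.
hdr = np.array([0.25, 0.9, 4.0, 16.0])

# Clamping at 1 collapses both highlights to the same value.
clamped = np.clip(hdr, 0.0, 1.0)

def lower_exposure(image, stops):
    """Darken an image by a number of photographic stops (each stop halves it)."""
    return image / (2.0 ** stops)

print(lower_exposure(hdr, 2))      # the two highlights stay distinct
print(lower_exposure(clamped, 2))  # the two highlights become identical
```

Once the values above 1 are gone, lowering the exposure afterward cannot bring the highlight detail back.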

Conclusion

Many people have been using V-Ray in interactive mode for a long time. It is a great way to adjust shaders and lighting and see the results update interactively. The issue has always been that, because of the nature of progressive path tracing, the image can be fairly noisy to start. With the new OptiX denoiser from NVIDIA inside V-Ray, real-time, smart denoising can take place, giving users a better sense of the effect of their changes in a smoother, noise-free render.

The OptiX denoiser is available for people to try in the V-Ray Next for 3ds Max Beta.